June 1, 2020

by Oleg Dzhimiev

Basically, one needs to direct the IP camera stream (MJPEG or RTSP) to a virtual v4l2 device, which acts like a webcam and is automatically picked up by a web browser or a webcam application. This can be done easily with GStreamer or FFmpeg.

Quick setup

Install and create a virtual webcam

~$ sudo apt install v4l2loopback-dkms

~$ sudo modprobe v4l2loopback devices=1

Direct the stream

The options below are for gstreamer/ffmpeg and rtsp/mjpeg streams:

gstreamer:

# rtsp stream

~$ sudo gst-launch-1.0 rtspsrc location=rtsp://192.168.0.9:554 ! rtpjpegdepay ! jpegdec ! videoconvert ! tee ! v4l2sink device=/dev/video0 sync=false

# mjpeg stream

~$ sudo gst-launch-1.0 souphttpsrc is-live=true location=http://192.168.0.9:2323/mimg ! jpegdec ! videoconvert ! tee ! v4l2sink device=/dev/video0

ffmpeg:

# rtsp stream

~$ sudo ffmpeg -fflags nobuffer -i rtsp://192.168.0.9:554 -pix_fmt yuv420p -f v4l2 /dev/video0

# mjpeg stream

~$ sudo ffmpeg -fflags nobuffer -i http://192.168.0.9:2323/mimg -pix_fmt yuv420p -r 30 -f v4l2 /dev/video0
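For scripted setups, the ffmpeg invocation can be assembled programmatically. The following is a minimal sketch, not part of the original setup: the function name `ffmpeg_to_v4l2` and its parameters are hypothetical, and it only uses the ffmpeg options already shown in this post.

```python
import shlex


def ffmpeg_to_v4l2(source_url, device="/dev/video0", rate=None):
    """Build an ffmpeg argument list that feeds a network stream into a v4l2 device.

    Hypothetical helper: it only arranges the options used in this post.
    """
    # -fflags nobuffer is given before -i so that it applies to the input.
    cmd = ["ffmpeg", "-fflags", "nobuffer", "-i", source_url, "-pix_fmt", "yuv420p"]
    if rate is not None:
        # Optional output frame rate, as used for the mjpeg stream.
        cmd += ["-r", str(rate)]
    cmd += ["-f", "v4l2", device]
    return cmd


if __name__ == "__main__":
    cmd = ffmpeg_to_v4l2("http://192.168.0.9:2323/mimg", rate=30)
    # Print the command line for inspection.
    print(shlex.join(cmd))
    # To actually run it (requires ffmpeg and a loaded v4l2loopback module):
    # import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when the stream URL contains special characters.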

Finally

Start a video conference application, select the webcam from the menu (/dev/video0).

Test link: meet.jit.si


July 20, 2018

by Oleg Dzhimiev

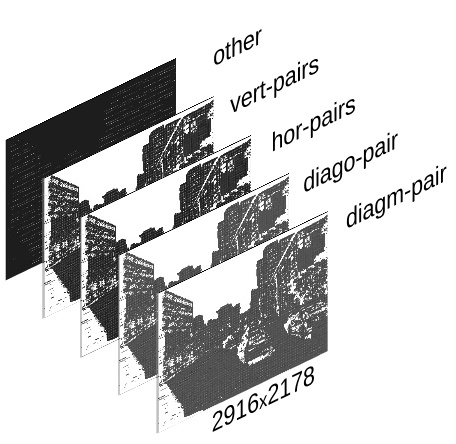

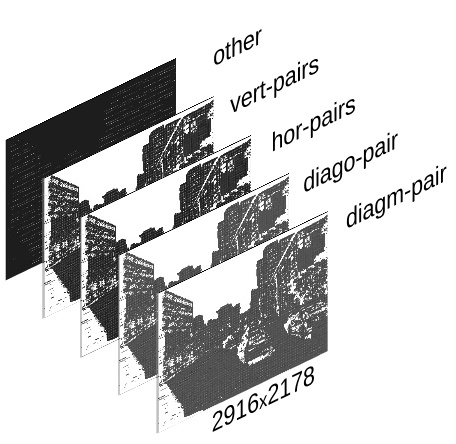

Fig.1 TIFF image stack

The input is a <filename>.tiff: a TIFF image stack generated by an ImageJ Java plugin (using Bio-Formats), with Elphel-specific information stored in the TIFF tags written by ImageJ.

Reading and formatting image data for TensorFlow can be split into the following subtasks:

- convert a TIFF image stack into a NumPy array

- extract information from the TIFF header tags

- reshape/perform a few array manipulations based on the information from the tags.

To do this we have created a few Python scripts (see python3-imagej-tiff: imagej_tiff.py) that use Pillow, NumPy, Matplotlib, etc.

Usage:

~$ python3 imagej_tiff.py <filename>.tiff

It will print header info in the terminal and display the layers (and decoded values) using Matplotlib.


April 20, 2018

by Oleg Dzhimiev

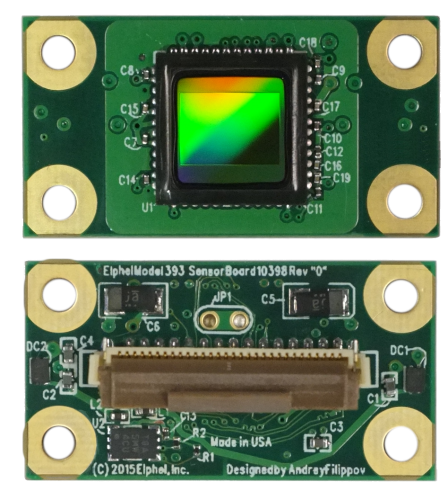

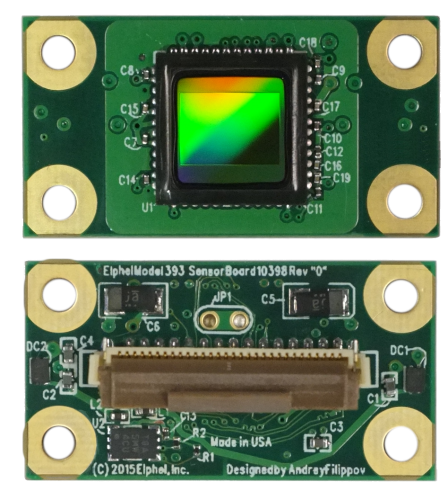

Fig.1 MT9F002

MT9F002

This post briefly covers the implementation of a driver for On Semi’s MT9F002 14MPx image sensor for the 10393 system boards; the steps are more or less general. The driver is included in the latest software/firmware image (20180416). The implemented features are programmable:

- window size

- horizontal & vertical mirror

- color gains

- exposure

- fps and trigger-synced ports

- frame-based command sequence that allows changing the settings of any image up to 16 frames ahead (it didn’t need to be implemented, as it’s the common part of the driver for all sensors)

- auto cable phase adjustment during init for cables of various lengths


January 30, 2018

by Oleg Dzhimiev

Photo Finish: all cars driving in the same direction effect

Since 2005 and the older 333 model, Elphel cameras have had a Photo Finish mode. It was first ported to the 353 generation, and then from the 353 to the 393 camera systems.

In this mode the camera samples scan lines and delivers composite images as video frames. Due to the Bayer pattern of the sensor, the minimal sample height is 2 lines. The maximum rate for the minimal sample height is 2300 line pairs per second. The width of a composite frame can be up to 16384 px (it is determined by WOI_HEIGHT). A sequence of these frames can simply be joined together without any missing scan lines.
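As a rough illustration of these numbers, the time span covered by one composite frame can be estimated from the line-pair rate. The sketch below assumes that each 2-line sample contributes 2 px to the composite dimension set by WOI_HEIGHT; the function name `composite_frame_seconds` is hypothetical and not part of the camera software.

```python
def composite_frame_seconds(size_px, sample_height=2, samples_per_second=2300):
    """Estimate the time covered by one composite frame.

    Assumption: the composite dimension (size_px) is made of consecutive
    samples, each sample_height lines tall.
    """
    if size_px % sample_height:
        raise ValueError("size_px must be a multiple of sample_height")
    samples = size_px // sample_height
    return samples / samples_per_second


if __name__ == "__main__":
    # Largest composite frame at the maximum line-pair rate.
    print(f"{composite_frame_seconds(16384):.2f} s")
```

Under these assumptions, a 16384 px composite frame at the maximum rate holds 8192 line pairs and spans roughly 3.56 seconds.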

Current firmware (20180130) includes a photo finish demo: http://<camera_ip>/photofinish

A couple of notes on the 393 photo finish implementation:

- It works in JP4 format (COLOR=5). Because demosaicing is not done in this format, no extra scan lines are required, which simplified the FPGA logic.

- The fps is controlled:

  - by exposure for the sensor in the free-run mode (TRIG=0, delivers the max fps possible)

  - by the external or internal trigger period for the sensor in the snapshot mode (TRIG=4, a bit lower fps than in free run)

See our wiki’s Photo-finish article for instructions and examples.

December 20, 2017

by Oleg Dzhimiev

We have updated the Yocto build system to Poky Rocko released back in October. Here’s a short summary table of the updates:

| | before | after |
|---|---|---|
| Poky | 2.0 (Jethro) | 2.4 (Rocko) |
| gcc | 5.3.0 | 7.2.0 |
| linux kernel | 4.0 | 4.9 |

Other packages got updates as well:

- apache2-2.4.18 => apache2-2.4.29

- php-5.6.16 => php-5.6.31

- udev-182 changed to eudev-3.2.2, etc.

This new version is in the rocko branch for now, but it will be merged into master after a transition period (and the current master will be moved to the jethro branch). Below are a few tips for future updates.


December 3, 2017

by Andrey Filippov and Oleg Dzhimiev

Multiple Subdomains for the Same Web Site

It is common for a company or organization to use multiple content management systems (CMS) and specialized web applications to organize its web presence. We describe here how Elphel handles such a variety of CMSs and provide the source code that can be customized for other similar sites.


July 11, 2017

by Oleg Dzhimiev

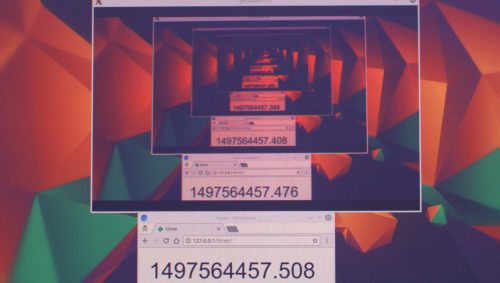

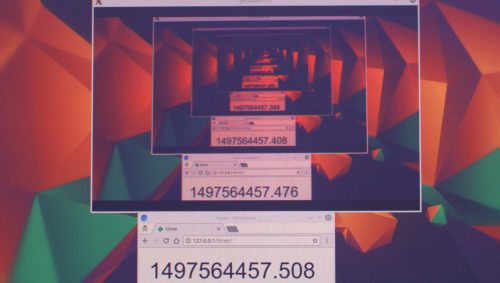

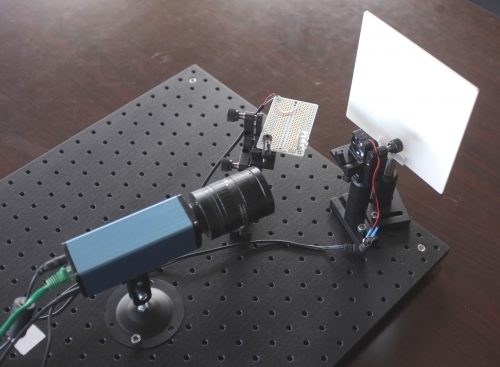

Fig.1 Live stream latency testing

Recently we had an inquiry about whether our cameras are capable of streaming low latency video. The short answer is yes: the camera’s average output latency for 1080p at 30 fps is ~16 ms. It is possible to reduce it to almost 0.5 ms with a few changes to the driver.

However, the total latency of the system, from capture to display, includes delays caused by the network, PC, software and display.

In the results of our experiment (similar to this one), these delays contribute the most (around 40-50 ms) to the stream latency, at least for the given equipment.


October 24, 2016

by Oleg Dzhimiev

Operation modes in conventional CMOS image sensors with the electronic rolling shutter

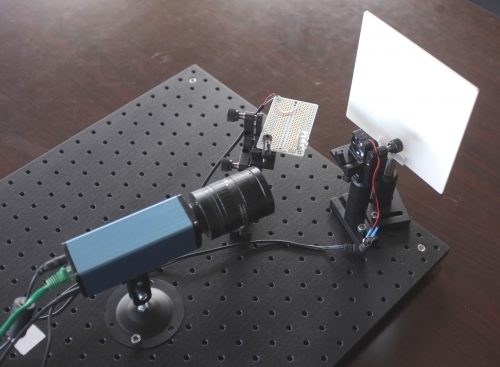

Flash test setup

Most CMOS image sensors have an Electronic Rolling Shutter (ERS): the images are acquired by scanning line by line. The strengths and weaknesses of such sensors are well known, and extremely wide usage has made the technology close to perfect (Andrey might have already said this somewhere before).

There are CMOS sensors with a Global Shutter, but (comparing the same optical formats):

- because of more elements per pixel – they have lower full well capacity and quantum efficiency

- because analog memory is used – they have higher dark current and higher shutter ratio


So, the typical sensor with ERS may support 3 modes of operation:

- Electronic Rolling Shutter (ERS) Continuous

- Electronic Rolling Shutter (ERS) Snapshot

- Global Reset Release (GRR) Snapshot

GRR Snapshot was available in the 10353 cameras, but we never tried it ourselves: one had to write directly to the sensor’s register to turn it on. Now it is tested and working in the 10393s, where it is available through the TRIG (0x14) parameter.


September 8, 2016

by Oleg Dzhimiev

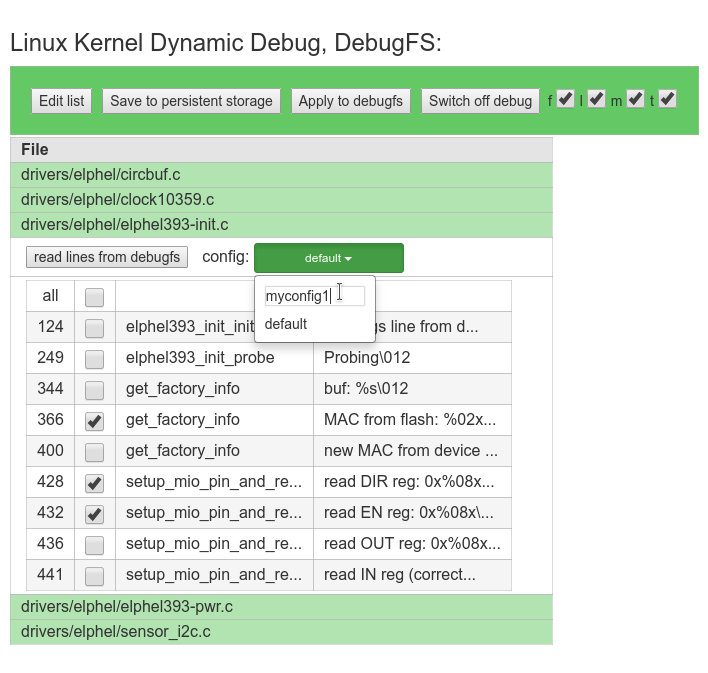

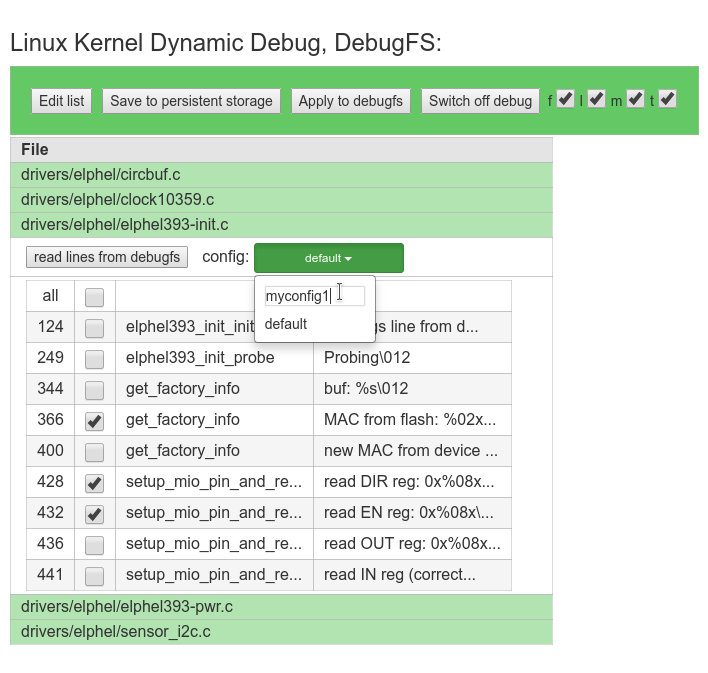

Along with the documentation, there are a number of articles explaining the dynamic debug (dyndbg) feature of the Linux kernel, like this one or this. However, we haven’t found anything that extends the basic functionality, so we created a web interface using JavaScript and PHP on top of dyndbg.

Fig.1 debugfs-webgui
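For context, dyndbg itself is driven by writing short queries to a control file in debugfs. The sketch below is not the web interface's code (which uses JavaScript and PHP); it is a minimal Python illustration of composing and applying such a query. It assumes debugfs is mounted at /sys/kernel/debug; the helper names and the module name in the usage example are hypothetical.

```python
# Default location of the dyndbg control file, assuming debugfs is mounted here.
CONTROL = "/sys/kernel/debug/dynamic_debug/control"

# Classic dyndbg match keywords.
MATCH_KEYWORDS = {"func", "file", "module", "format", "line"}


def dyndbg_query(match, value, enable=True):
    """Compose a dyndbg query that turns printing on (+p) or off (-p)."""
    if match not in MATCH_KEYWORDS:
        raise ValueError(f"unknown match keyword: {match}")
    flag = "+p" if enable else "-p"
    return f"{match} {value} {flag}"


def apply_query(query, control=CONTROL):
    """Write a query to the control file (needs root on a real system)."""
    with open(control, "w") as f:
        f.write(query + "\n")


if __name__ == "__main__":
    # "my_driver" is a placeholder module name.
    print(dyndbg_query("module", "my_driver"))
```

On a real system, `apply_query(dyndbg_query("module", "my_driver"))` would enable the debug messages of that module; reading the control file back lists every call site with its current flags.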


March 18, 2016

by Oleg Dzhimiev

Overview

- Target board: Elphel 10393 (Xilinx Zynq 7Z030) with 1GB NAND flash

- U-Boot final image files (both support NAND flash commands):

- boot.bin – SPL image – loaded by Xilinx Zynq BootROM into OCM, no FSBL required

- u-boot-dtb.img – full image – loaded by boot.bin into RAM

- Build environment and dependencies (for details see this article):

